At Parafin, one of our goals is to be the fastest provider of capital to small businesses, because we know that when small business owners are making critical financial decisions, time is of the essence. While we strive to be the fastest, we must also ensure that our underwriting decisions are accurate. After all, Parafin would not be in business if we funded SMBs that couldn’t pay us back!

Achieving both speed and accuracy would be relatively simple if we underwrote businesses using the owner’s FICO score. But we’ve taken the stance of never asking business owners for their scores, because we know that credit scores can create unfair bias against certain groups (minorities, women, and immigrants) and are not always the most accurate measure of a business’s health.

Parafin’s approach to underwriting is much more empirical and merit-based. We analyze thousands of data points like revenue history, cash flows, and debt load to decide whether a business is healthy enough to fund. We use classical machine learning (ML) to power our underwriting models and decide which merchants to fund and which to deny.

In addition to structured data points like revenue history, we also look at unstructured data, such as any adverse media we can find on a given business. In the past, this required human review: our operations team would painstakingly search Google to catch lawsuits, fraud, or closure articles related to a given business or its owner. This review added tens of minutes per application and significantly slowed things down for customers. As a compromise, we performed adverse media review only for riskier businesses with larger offer sizes. That meant forgoing decision accuracy on businesses that didn’t get human review, and therefore taking on greater financial risk.

With the rapid advancement in large language model (LLM) capabilities and agentic AI, we no longer have to compromise between speed and recall. We can now rapidly review unstructured data across all applications, thereby reducing financial risk while giving our customers a faster and smoother application experience.

When a small business has pending lawsuits, fraud cases, bankruptcy filings, or closures, that is a major risk signal when underwriting it for capital. It’s also possible that the business has overcome its financial difficulties and is primed for funding. Our agentic workflow helps us understand, in real time, what is actually going on with each business as it relates to adverse media. It analyzes unstructured web inputs and applies nuanced reasoning to entity matching and risk classification.

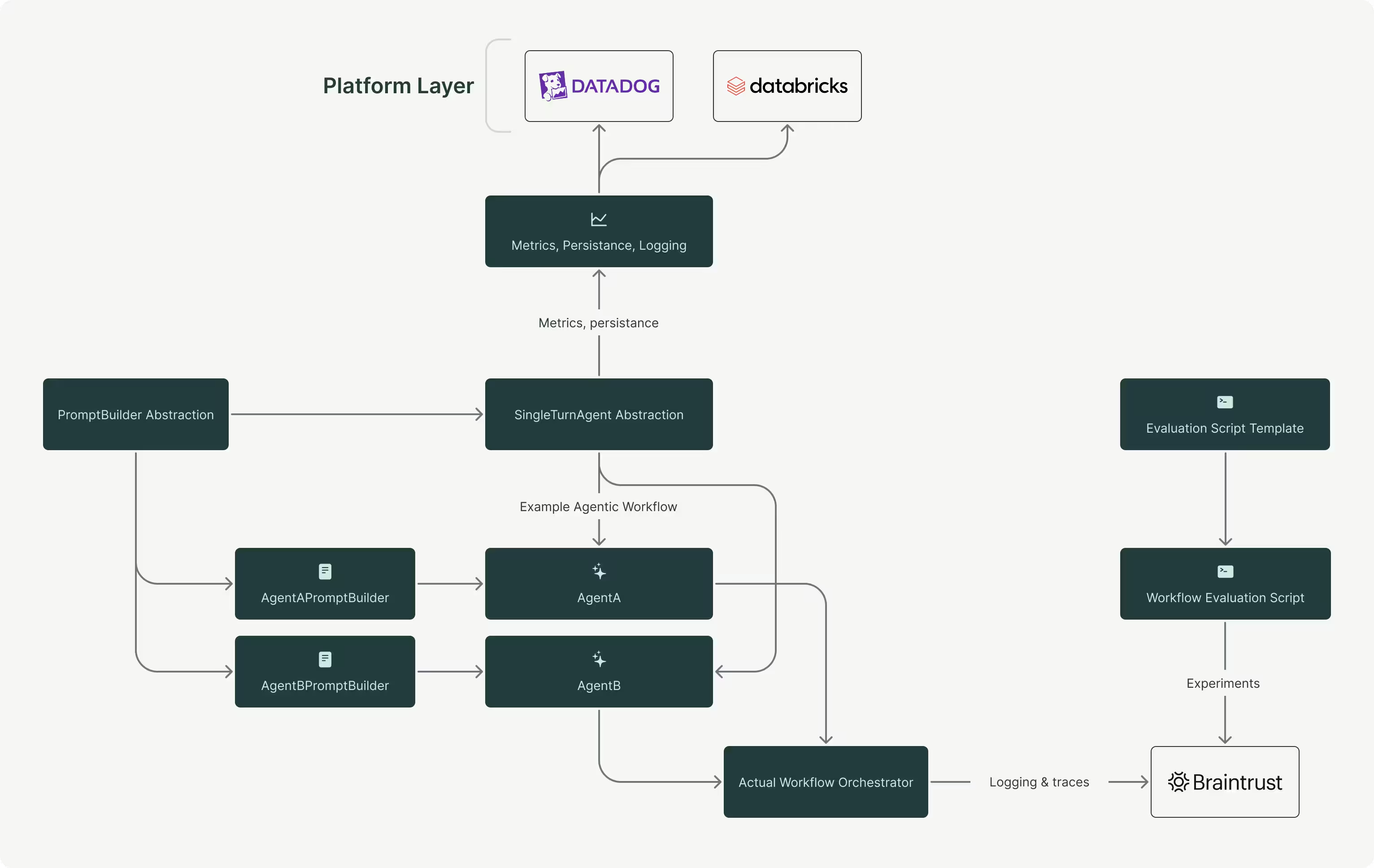

As we planned the implementation, we designed its components to be reusable by future agentic workflows while maintaining quality engineering practices.

Because agentic workflows have many use cases at Parafin beyond adverse media checks, it made sense to invest in a shared infrastructure layer providing core platform capabilities such as observability, evaluations, auditability, prompting best practices, and more. After extensive scoping of existing tooling for LLM development, we decided this platform would best take the form of a thin layer on top of the OpenAI Agents SDK.

We chose this approach because it provided an extremely lightweight framework that was flexible enough to accommodate Parafin-specific constraints, yet still powerful enough to abstract away much of the boilerplate we didn’t care about. Implementation took only a couple of weeks and has returned far more in reduced maintenance and iteration costs. To dive deeper into what our layer consists of, here are a few key components:

Agentic workflows consist of deterministic steps, one or more of which are single-turn agent calls. For example, to automatically generate a numerical review score for a given restaurant, a workflow might deterministically fetch reviews from an API and then feed them into an LLM to produce the desired sentiment score. To support use cases like this, where the result of a single LLM prompt is core to the workflow, we built a thin layer on top of the OpenAI Agents abstraction that automates metrics, logging, and persistence so that our systems are observable and auditable.
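To make the idea of a "thin layer" concrete, here is a minimal sketch of what such a single-turn wrapper could look like. All names (`SingleTurnAgent`, `AgentCallRecord`, the record fields) are hypothetical and not Parafin's actual code. The LLM call is injected as a plain callable so the sketch runs offline; in production it might wrap something like `Runner.run_sync(agent, prompt).final_output` from the OpenAI Agents SDK. Persistence is likewise a pluggable `sink`.

```python
import logging
import time
import uuid
from dataclasses import dataclass, field
from typing import Callable, Optional

logger = logging.getLogger("single_turn_agent")


@dataclass
class AgentCallRecord:
    """One persisted record per single-turn agent call (hypothetical schema)."""
    call_id: str
    workflow: str
    agent_name: str
    prompt: str
    output: Optional[str]
    error: Optional[str]
    latency_ms: float


@dataclass
class SingleTurnAgent:
    """Hypothetical wrapper: runs one LLM call and records metrics, a log line, and an audit record."""
    workflow: str
    agent_name: str
    llm_call: Callable[[str], str]            # injected LLM call (e.g. wraps an SDK runner)
    sink: Callable[[AgentCallRecord], None]   # persistence target (e.g. an object-store writer)
    metrics: dict = field(default_factory=lambda: {"calls": 0, "errors": 0})

    def run(self, prompt: str) -> str:
        start = time.perf_counter()
        output: Optional[str] = None
        error: Optional[str] = None
        self.metrics["calls"] += 1
        try:
            output = self.llm_call(prompt)
            return output
        except Exception as exc:
            self.metrics["errors"] += 1
            error = repr(exc)
            raise  # callers still see the failure; the record below captures it
        finally:
            # Runs on both success and failure, so every call is logged and persisted.
            latency_ms = (time.perf_counter() - start) * 1000
            logger.info("agent_call workflow=%s agent=%s latency_ms=%.1f error=%s",
                        self.workflow, self.agent_name, latency_ms, error)
            self.sink(AgentCallRecord(
                call_id=str(uuid.uuid4()), workflow=self.workflow,
                agent_name=self.agent_name, prompt=prompt, output=output,
                error=error, latency_ms=latency_ms))
```

Usage, with a stub standing in for the model: `SingleTurnAgent("review_sentiment", "scorer", llm_call=lambda p: "4", sink=records.append).run("Reviews: ...")` returns `"4"` and appends one `AgentCallRecord` to `records`. Putting logging and persistence in a `finally` block is the design choice that makes failed calls just as auditable as successful ones.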

Although many agent frameworks exist, prompt engineering “best practices” are often not built in, both because the field evolves quickly and because of the broader shift toward context engineering as a whole. However, that doesn’t mean a small investment in capturing what we can isn’t worthwhile. By building a template around some currently known best practices, we’ve removed the burden of worrying about specifics like XML tags or multi-shot prompting, freeing developers to focus on the task they are trying to solve.
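As an illustration of what a prompt template like this might encode, the sketch below wraps each prompt section in XML tags and renders (input, output) pairs as few-shot examples, so a developer only supplies the content. The class name, tag names, and section order are hypothetical choices for this sketch, not a description of Parafin's template.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class PromptTemplate:
    """Hypothetical template that applies XML-tagged sections and few-shot examples."""
    role: str
    task: str
    output_format: str
    # (input, expected output) pairs rendered as few-shot examples
    examples: List[Tuple[str, str]] = field(default_factory=list)

    def render(self, context: str) -> str:
        parts = [
            f"<role>\n{self.role}\n</role>",
            f"<task>\n{self.task}\n</task>",
        ]
        if self.examples:
            shots = "\n".join(
                f"<example>\n<input>\n{inp}\n</input>\n<output>\n{out}\n</output>\n</example>"
                for inp, out in self.examples
            )
            parts.append(f"<examples>\n{shots}\n</examples>")
        parts.append(f"<output_format>\n{self.output_format}\n</output_format>")
        # Per-call context (e.g. scraped article text) goes in its own tagged section.
        parts.append(f"<context>\n{context}\n</context>")
        return "\n\n".join(parts)
```

The section order here is an arbitrary choice for the sketch. The point is that conventions like tagging and example formatting live in one place, so every workflow picks up changes to them at once.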

Evals are an essential component of any production-grade LLM application. As with any ML application, determining a system’s effectiveness requires crafting a golden dataset and running offline experiments, combined with a plan for continuous evaluation once the application is rolled out. Since this is true industry-wide, it made sense to leverage frameworks dedicated to the scaffolding around evals.

Of the many evaluation frameworks we scoped, Braintrust reigned supreme in ease of implementation, support, and effectiveness for what we were looking for. Once again, we created a thin wrapper on top that facilitates dataset uploads and evaluation runs. Because evaluations are heavily use-case specific, these basic building blocks were the right scope for platform-level work.
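The core loop of an offline eval is simple: run the task over every golden example and average each scorer. The plain-Python sketch below shows that shape with hypothetical names. It is not Braintrust's API, although Braintrust's `Eval` entry point is organized around a similar data / task / scores split.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class GoldenExample:
    input: str
    expected: str


def exact_match(output: str, expected: str) -> float:
    """Case- and whitespace-insensitive exact match, scored 0 or 1."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def run_offline_eval(
    dataset: List[GoldenExample],
    task: Callable[[str], str],
    scorers: Dict[str, Callable[[str, str], float]],
) -> Dict[str, float]:
    """Run `task` on every golden example and return each scorer's mean score."""
    if not dataset:
        raise ValueError("golden dataset is empty")
    totals = {name: 0.0 for name in scorers}
    for example in dataset:
        output = task(example.input)
        for name, scorer in scorers.items():
            totals[name] += scorer(output, example.expected)
    return {name: total / len(dataset) for name, total in totals.items()}
```

With a golden set of four labeled articles where the task gets three right, `run_offline_eval(...)` returns `{"exact_match": 0.75}`. Iterating on prompts or context then amounts to re-running this and comparing scores.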

We created a Datadog dashboard with core metrics on agent call latencies, counts, errors, and more, alongside alerting and tagged logging.
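Tagged metrics are what make a dashboard like this sliceable by workflow and agent. The sketch below formats per-call metrics as DogStatsD datagrams (`<name>:<value>|<type>|#<tag>:<value>,...`, where `c` is a count and `d` a distribution). The metric and tag names are hypothetical. In practice the `datadog` package's client (for example `statsd.increment(...)` and `statsd.distribution(...)`) builds and sends these datagrams for you. This sketch only shows what ends up on the wire.

```python
from typing import Dict, List, Optional


def dogstatsd_line(name: str, value: float, metric_type: str,
                   tags: Optional[Dict[str, str]] = None) -> str:
    """Format one metric as a DogStatsD datagram: <name>:<value>|<type>|#<tags>."""
    line = f"{name}:{value}|{metric_type}"
    if tags:
        # Sorted for deterministic output; DogStatsD itself does not require ordering.
        line += "|#" + ",".join(f"{k}:{v}" for k, v in sorted(tags.items()))
    return line


def agent_call_metrics(workflow: str, agent_name: str,
                       latency_ms: float, error: bool) -> List[str]:
    """Hypothetical per-call metrics: a call count, a latency distribution, and an error count."""
    tags = {"workflow": workflow, "agent": agent_name}
    lines = [
        dogstatsd_line("llm.agent.calls", 1, "c", tags),
        dogstatsd_line("llm.agent.latency_ms", latency_ms, "d", tags),
    ]
    if error:
        lines.append(dogstatsd_line("llm.agent.errors", 1, "c", tags))
    return lines
```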

The single-turn agent abstraction described above persists every call to S3 for both auditability and analytics. A simple pipeline loads these records into Databricks, where our data scientists can build application-specific tables on top and extract valuable insights.
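One common way to make persisted calls easy to load downstream is a Hive-style partitioned key layout plus one JSON document per object. Spark's partition discovery turns `key=value` path segments into columns when reading a prefix (for example `spark.read.json("s3://<bucket>/agent_calls/")`). The prefix, partition names, and helper functions below are hypothetical. The upload itself could be a boto3 `put_object(Bucket=..., Key=key, Body=body)` call, which is omitted so the sketch runs offline.

```python
import json
from datetime import datetime, timezone


def audit_object_key(workflow: str, call_id: str, ts: datetime) -> str:
    """Hypothetical Hive-style key: partitions by workflow and UTC date."""
    if ts.tzinfo is None:
        raise ValueError("timestamp must be timezone-aware")
    day = ts.astimezone(timezone.utc).strftime("%Y-%m-%d")
    return f"agent_calls/workflow={workflow}/dt={day}/{call_id}.json"


def serialize_record(record: dict) -> bytes:
    """One JSON document per object; sorted keys keep output stable for diffs and tests."""
    return (json.dumps(record, sort_keys=True, default=str) + "\n").encode("utf-8")
```

Partitioning on the UTC date, rather than local time, keeps a call from landing in two different day partitions depending on where it was processed.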

Spending time to design and build platform capabilities has been an instrumental investment. With platform-level helpers in place, developing and rolling out the adverse media agentic workflow took only a couple of weeks. We didn’t need to waste time creating schemas around calling an LLM client and handling its intermediate outputs. Instead, we spent our time collecting a golden dataset from historical records and iterating on context engineering using offline evaluation feedback.

We greatly improved accuracy over a naive single-agent workflow by implementing a three-agent system that separates the concerns of filtering, entity relevance, and classification. In addition, by giving select agents web-scraping tools to fetch more context where appropriate, we improved accuracy from 60% in initial evaluations to 95%, with the remaining inaccurate matches reflecting expected shortcomings. Latency, cost, and development time were also key factors in model choice and workflow design. We dropped alternative workflows, such as separate agents per risk type or deterministic site scraping, in favor of the one that best fit our current requirements.
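The separation of concerns described above can be sketched as a three-stage pipeline: a filter discards irrelevant articles, an entity-relevance check discards articles about a different business with a similar name, and a classifier assigns a risk type. In this minimal sketch each stage is a plain callable standing in for an LLM agent, and the web-scraping tools are omitted. The function names and the risk taxonomy (drawn from the lawsuit, fraud, bankruptcy, and closure signals mentioned earlier) are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical taxonomy based on the risk signals named in this post.
RISK_TYPES = ("lawsuit", "fraud", "bankruptcy", "closure", "none")


@dataclass
class Article:
    url: str
    text: str


@dataclass
class Finding:
    url: str
    risk_type: str


def adverse_media_pipeline(
    business: str,
    articles: List[Article],
    is_potentially_adverse: Callable[[Article], bool],   # stage 1: filter agent
    is_same_entity: Callable[[str, Article], bool],      # stage 2: entity-relevance agent
    classify_risk: Callable[[str, Article], str],        # stage 3: classification agent
) -> List[Finding]:
    findings = []
    for article in articles:
        # Stage 1: cheaply drop articles that are clearly not adverse media.
        if not is_potentially_adverse(article):
            continue
        # Stage 2: keep only articles about *this* business, not a namesake.
        if not is_same_entity(business, article):
            continue
        # Stage 3: map the article onto a fixed risk taxonomy.
        risk = classify_risk(business, article)
        if risk not in RISK_TYPES:
            raise ValueError(f"unexpected risk label: {risk!r}")
        if risk != "none":
            findings.append(Finding(article.url, risk))
    return findings
```

Because each stage has a narrow contract, each one can be evaluated and prompted independently. Validating the classifier's label against a closed set also catches malformed model output before it reaches underwriting.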


It’s now been several months since we shipped the adverse media agentic workflow. It has fully replaced the manual process our operations team would otherwise have had to take on, making our funding process much faster. It has already saved hundreds of hours, and its expansive searches have caught cases that would otherwise have been missed. Overall, this workflow increased recall by a factor of 2.8x, leading to greater coverage of the SMBs Parafin serves and lower financial risk for the company.

Now that the workflow has proven effective, there has been continued demand to expand its capabilities. One possibility is integrating new third-party data sources, either as direct sources for the workflow to extract signals from or as LLM tools that provide better context and lead to higher precision. Another is using these classifications as features in our underwriting itself through large-scale backfills.

Beyond adverse media, the platform components we built have accelerated other LLM use cases across Parafin. For example, it took only a week to develop a highly accurate transaction classification workflow that powers underwriting for our recently launched Pay Over Time product (more on that in the near future). The monitoring and separation of concerns the platform provides have made debugging and iterating on these systems simple.

There is still so much exciting work to be done as we continue to build out both our LLM platform and use cases on top.

For our platform, we aim to support using LLMs to classify hundreds of millions of transactions and to tackle the scaling challenges that come with it.
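One common way to attack this kind of scale, which is not something this post says Parafin does, is to avoid classifying every transaction individually. Instead, you normalize transaction descriptors, deduplicate them, send only the unique ones to the model in fixed-size batches, and then map labels back. The sketch below assumes a hypothetical `classify_batch` callable (standing in for a batched LLM call) and a deliberately crude normalization rule.

```python
import re
from typing import Callable, Dict, List


def normalize_descriptor(desc: str) -> str:
    """Hypothetical normalization: lowercase, drop digits (store numbers, dates), collapse spaces."""
    desc = re.sub(r"\d+", " ", desc.lower())
    return re.sub(r"\s+", " ", desc).strip()


def classify_transactions(
    descriptors: List[str],
    classify_batch: Callable[[List[str]], List[str]],  # stand-in for a batched LLM call
    batch_size: int = 100,
) -> List[str]:
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    # Deduplicate while preserving first-seen order, so repeated merchants cost one call.
    unique = list(dict.fromkeys(normalize_descriptor(d) for d in descriptors))
    labels: Dict[str, str] = {}
    for i in range(0, len(unique), batch_size):
        batch = unique[i:i + batch_size]
        out = classify_batch(batch)
        if len(out) != len(batch):
            raise ValueError("classifier returned the wrong number of labels")
        labels.update(zip(batch, out))
    # Map every original transaction back to its normalized descriptor's label.
    return [labels[normalize_descriptor(d)] for d in descriptors]
```

Because merchant descriptors repeat heavily across transactions, model calls scale with the number of distinct descriptors rather than the number of transactions. The trade-off is that an overly aggressive normalization rule can merge merchants that should be labeled differently.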

For our underwriting, we’re exploring the best ways to leverage LLMs for feature engineering, such as generating sentiment scores from public reviews of the businesses we serve.

For our operational processes, agentic workflows can help replace a lot more manual work, such as assisting our servicing team by summarizing a business’s operational status with relevant sources.

All of this is in service of Parafin’s mission of growing small businesses by providing seamless access to capital.

If any of the above sounds exciting to you, and you’d like to contribute to building the fastest, most accurate underwriting platform for SMBs, feel free to reach out or check out our careers page.

Author bio: Edwin Luo is a Senior Software Engineer at Parafin, where he leads LLM initiatives that power underwriting, operational workflows, and developer experience. Before joining Parafin, he held engineering roles at Datadog, Robinhood, Visa, and more.